Bio

Pengfei Zhang is a Ph.D. student at UC Irvine whose research focuses on multimodal large language models (LLMs) for human-centric multimedia understanding (video and speech) and for low-resource settings (cases where the training corpora are insufficient). Most of his work centers on adapting large language models to low-resource domains in human-centric tasks. His projects mainly cover human-centric visual understanding, action tokenizers, audio and speech recognition and reasoning, and co-speech visual alignment and generation. He is also involved in projects on multimodal LLMs for low-resource languages and cultures and on personalized agents for healthcare systems. His resume is available in Pengfei’s CV.

🎇 NEWS

06/2025: 👏 One paper, [ContextualGesture], is accepted by ACM MM 2025!

06/2025: 👏 One paper, [KinMo], is accepted by ICCV 2025!

06/2025: 👊 Started an Applied Scientist Intern position at Amazon Web Services AI Lab!

06/2025: One paper, [Adaptive LLM Retrieval], is accepted by AMIA 2025!

👀 Research

Multimodal LLMs for low-resource speech and visual understanding and generation

| Jan 2023 - Present | |

|---|---|

| This line of work aims to bridge human-centric video, audio (speech), and text in a unified semantic space. By tokenizing actions and synchronizing modalities in time (for example, aligning speech with co-speech gestures or visual actions), LLMs can generate coherent, context-aware outputs that reflect human-like perception and behavior. This enables applications such as gesture generation, motion synthesis, and audiovisual narration grounded in natural language. |  |

| Publications: | |

|

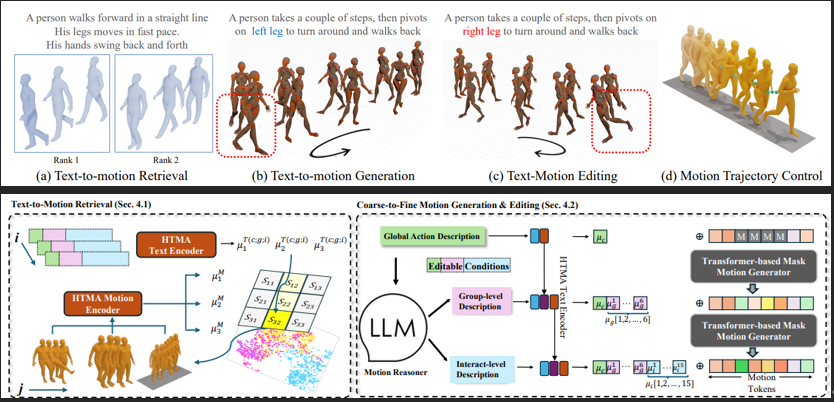

KinMo: Kinematic-aware Human Motion Understanding and Generation. Pengfei Zhang, Pinxin Liu, Pablo Garrido, Hyeongwoo Kim, Bindita Chaudhuri. ICCV 2025 [project] [preprint] [demo] |

|

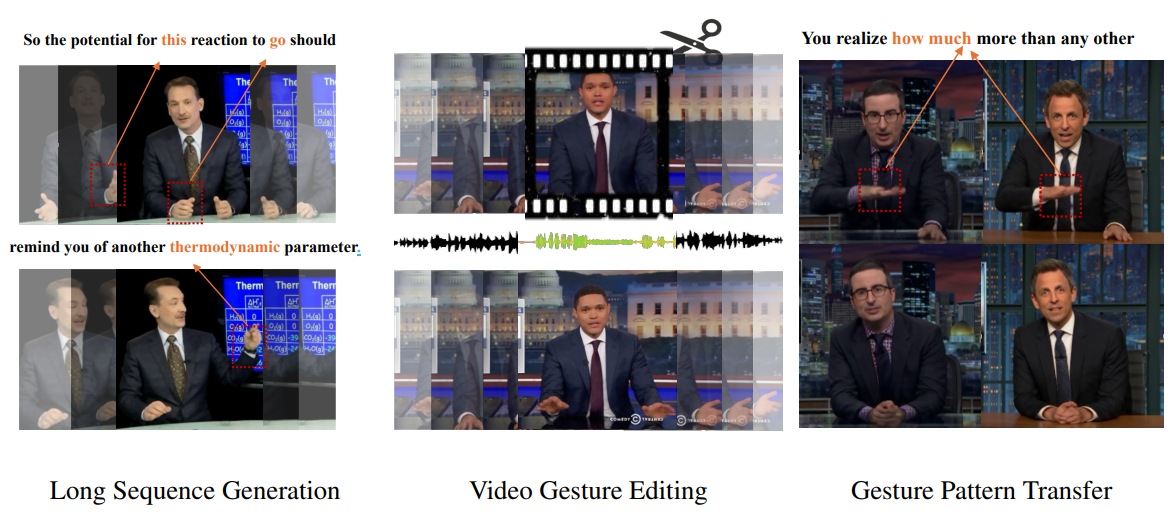

Contextual Gesture: Co-Speech Gesture Video Generation through Semantic-aware Gesture Representation. Pinxin Liu, Pengfei Zhang, Hyeongwoo Kim, Pablo Garrido, Ari Shapiro, Kyle Olszewski. ACM MM 2025 [project] [preprint] |

|

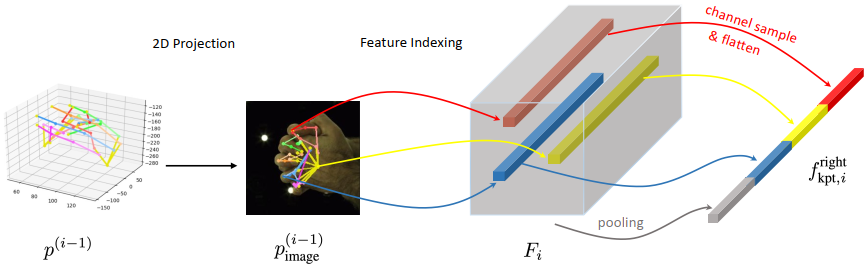

Handformer2T: A Lightweight Regression-based model for Interacting Hands Pose Estimation from a single RGB Image. Pengfei Zhang, Deying Kong. WACV 2024 [paper] |

Note on code release: Because this work was done during my internships, the company does not allow the code to be released. However, all details (including implementation details, prompts, dependencies, and referenced packages) are described in the paper. Meanwhile, we are doing our best to release everything that is allowed, including the datasets and demos. We apologize for the inconvenience. Feel free to contact me with any questions about reproduction.

LLM-enhanced recognition and reasoning on low-resource audio

| Jan 2023 - Present | |

|---|---|

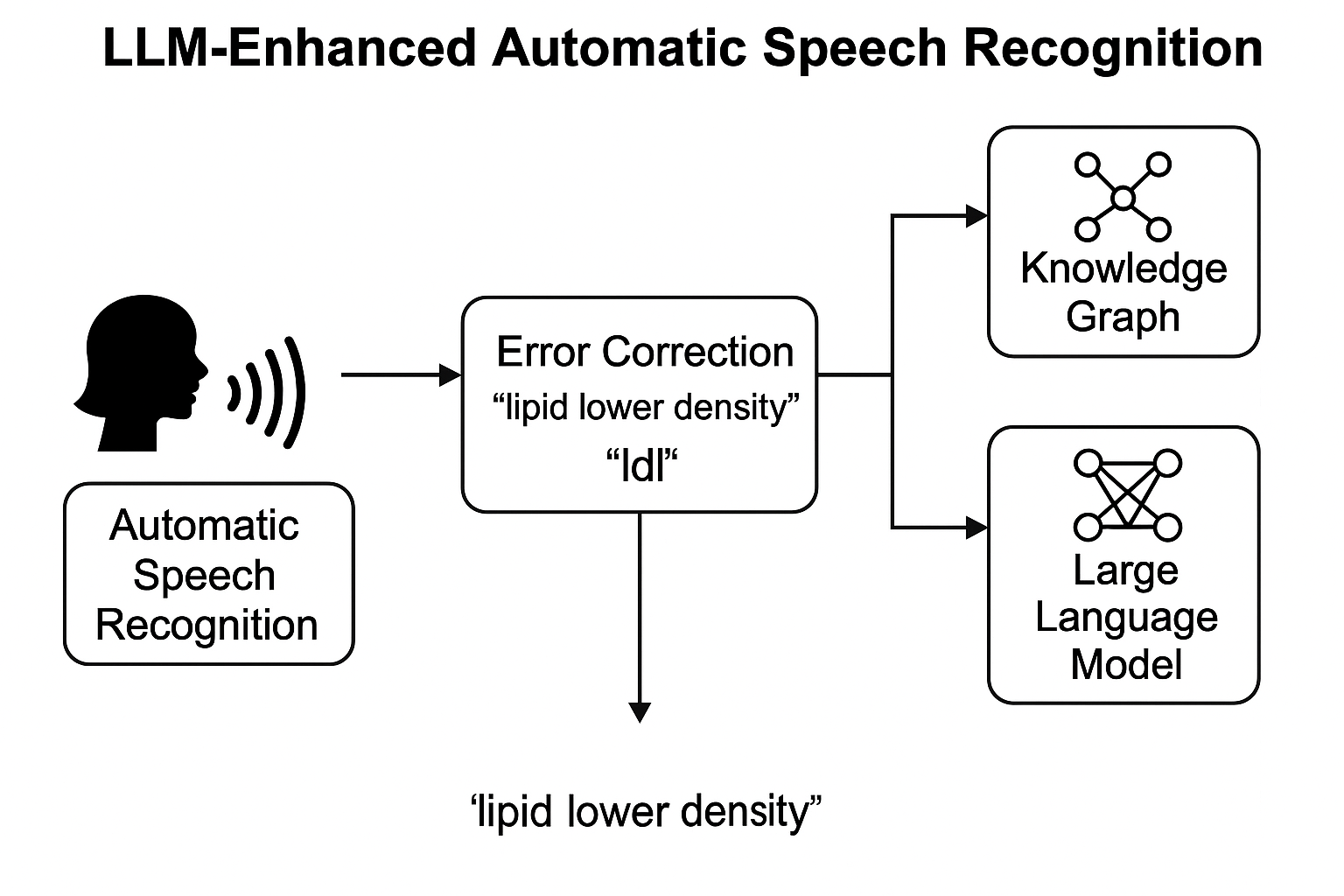

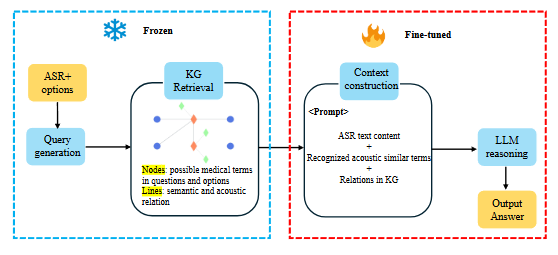

| LLM-enhanced recognition and reasoning on low-resource languages integrates large language models with traditional ASR pipelines to improve transcription accuracy, particularly in domain-specific and noisy scenarios. By leveraging external knowledge sources—such as medical knowledge graphs—and contextual understanding, LLMs can correct recognition errors, disambiguate terms, and enhance spoken question answering. This hybrid approach enables more robust and semantically informed speech understanding in complex applications like healthcare. |  |

| Publications: | |

|

MedSpeak: Knowledge Enhanced ASR Error Correction framework for Spoken Medical Question Answering |

Personalized agents for healthcare systems

| Sep 2021 - Present | |

|---|---|

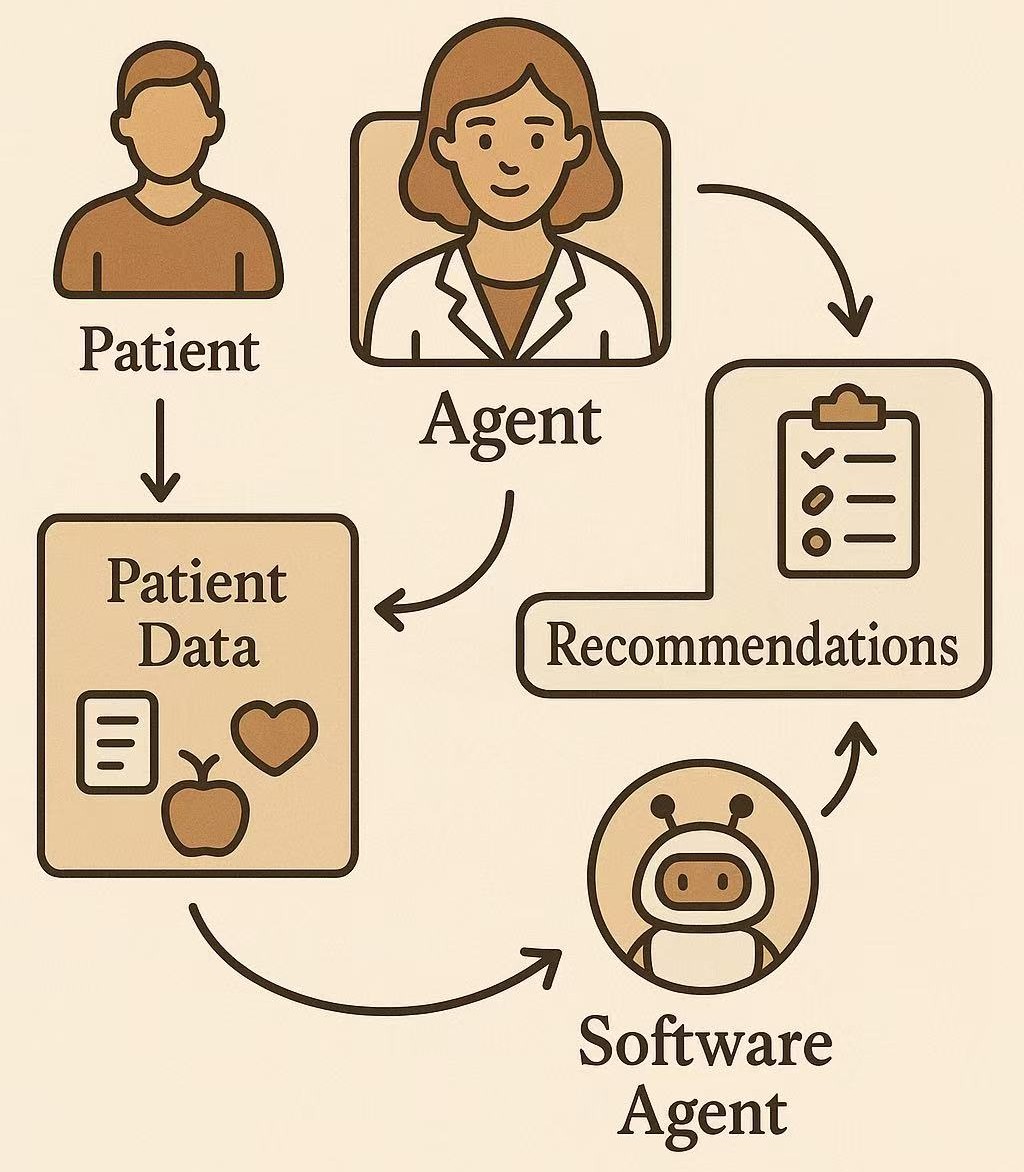

| Personalized agents for healthcare systems use autonomous software agents to analyze individual patient data (such as medical history, lifestyle, and preferences) and deliver tailored health advice and treatment options. These systems can interact dynamically with users and other agents (e.g., diagnostic or monitoring tools), adapting recommendations in real time to improve decision-making and patient outcomes. |  |

| Publications: | |

|

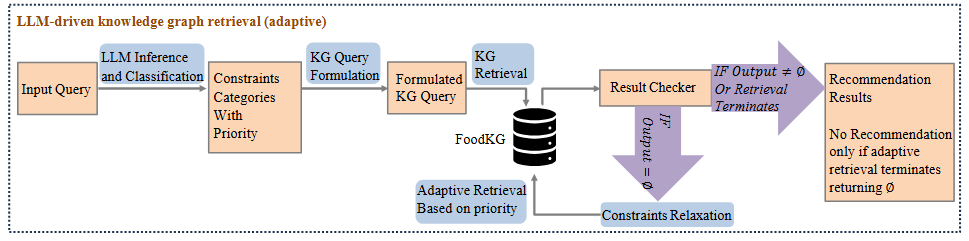

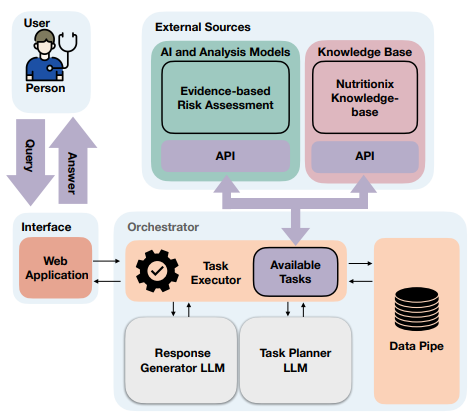

Adaptive Constraint Relaxation in Personalized Nutrition Recommendations: An LLM-Driven Knowledge Graph Retrieval Approach. Pengfei Zhang, Mohbat Fnu, Yutong Song, Oshani Seneviratne, Zhongqi Yang, Iman Azimi, Amir M. Rahmani. AMIA (American Medical Informatics Association) [preprint] |

|

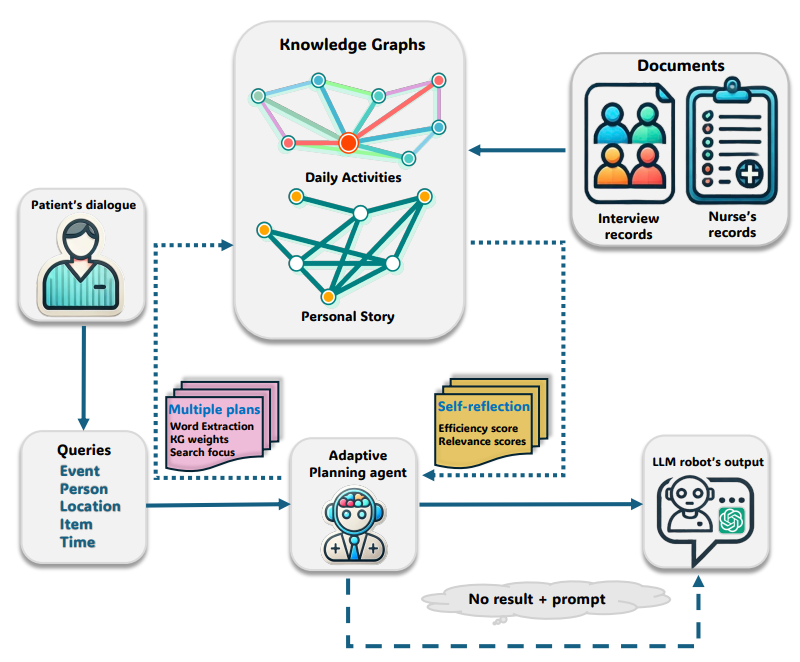

DEMENTIA-PLAN: An Agent-Based Framework for Multi-Knowledge Graph Retrieval-Augmented Generation in Dementia Care. Yutong Song, Chenhan Lyu, Pengfei Zhang, Sabine Brunswicker, Nikil Dutt, Amir M. Rahmani. AAAI 2025 Workshop: Knowledge Graphs for Health Equity, Justice, and Social Services [preprint] |

|

Knowledge-Infused LLM-Powered Conversational Health Agent: A Case Study for Diabetes Patients. Mahyar Abbasian, Zhongqi Yang, Elahe Khatibi, Pengfei Zhang, Nitish Nagesh, Iman Azimi, Ramesh Jain, Amir M. Rahmani. EMBC 2024 [paper] [code] [website] |

|

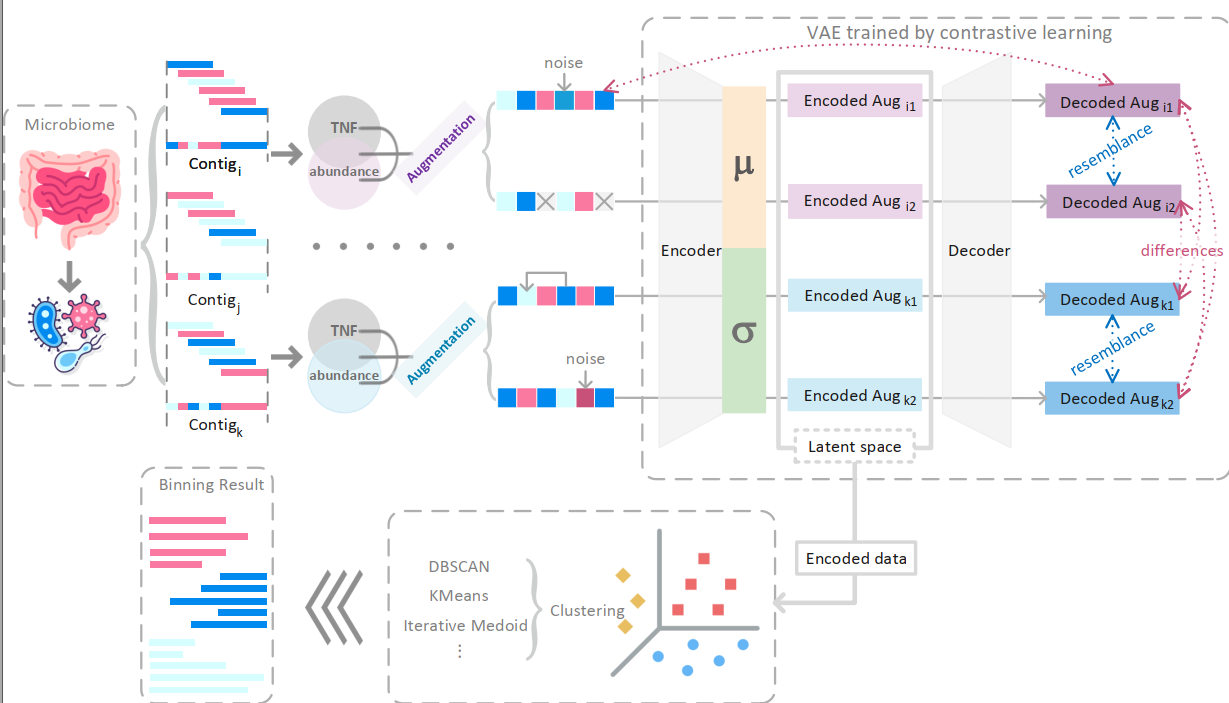

CLMB: deep contrastive learning for robust metagenomic binning. Pengfei Zhang, Zhengyuan Jiang, Yixuan Wang, Yu Li. RECOMB 2022 (oral) [paper] [code] [blog] |

💪 Internships

2025 Applied Scientist Intern at Amazon Web Services AI Lab. Location: Santa Clara

2024 Research Science Intern at Flawless AI Inc. Location: Los Angeles

2022 Research Intern at The Chinese University of Hong Kong. Location: Hong Kong

📽️ Projects

| Personal Software Programming Projects | |

|---|---|

| [Distributed Chatroom with LLaMa-Powered Summarization] | A multi-topic web chatroom that backs up previous conversations and provides LLaMA-powered summaries after each refresh |

🎖️ Awards

Dean’s award from UCI

National Encouragement Scholarship (top 20%) from USTC
